The answer by erikasan is correct if all of your measurements have the same uncertainty. However, if all of your measurements have the same uncertainty, you might as well put all $25\times 10 = 250$ measurements into one data set, whose mean and variance are

$$ \left<x\right> = \frac{\sum_i^M\sum_j^{N_i} x_{ij}}{\sum_i N_i} \qquad \sigma^2 = \frac{\sum_i^M\sum_j^{N_i} \left(x_{ij} - \left<x\right>\right)^2 }{\sum_i N_i} = \left<x^2\right> - \left<x\right>^2 $$

Here $i$ indexes your experiments, and $j$ indexes the measurements within each experiment. In your example you have $M=10$ experiments, with the same number of measurements $N_i=25$ in each. (For your case with $N_i = N$ for all $i$, the total population is $\sum_i N_i = MN$.) The "standard error on the mean" is

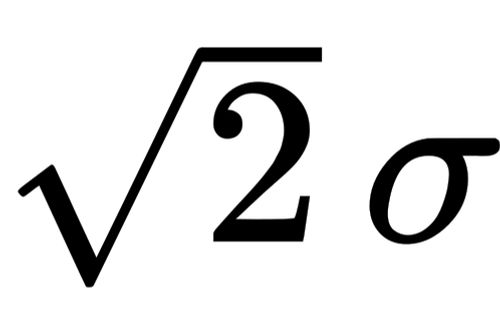

$$ \delta = \frac{\sigma}{\sqrt{\sum_i N_i}} $$

That is, the uncertainty on the mean is smaller than the uncertainty on each measurement, by the square root of the total population size. Your "result" would be "we measured $\left<x\right> \pm \delta$." (Beware of different notations in different sources. Some people use something like $\sigma_\text{s.e.m.}$ for the standard error on the mean; sometimes people will use $\sigma$ for my $\delta$ and just not talk about the population variance; sometimes people get sloppy and use $\sigma$ to mean different things in different places.)
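
As a rough illustration of the pooled approach, here is a minimal Python sketch. The function name `pooled_mean_and_sem` and the synthetic Gaussian data are assumptions made up for this example; they are not from the question. It uses the population variance (dividing by $\sum_i N_i$), matching the formula above.

```python
import math
import random


def pooled_mean_and_sem(experiments):
    """Pool every measurement into one data set.

    Returns (mean, sigma, sem), where sigma is the population standard
    deviation and sem = sigma / sqrt(total number of measurements).
    """
    values = [x for experiment in experiments for x in experiment]
    total = len(values)                      # sum_i N_i
    mean = sum(values) / total               # <x>
    # Population variance: divide by the total count, as in the text.
    variance = sum((x - mean) ** 2 for x in values) / total
    sigma = math.sqrt(variance)
    sem = sigma / math.sqrt(total)           # delta
    return mean, sigma, sem


# Synthetic stand-in data: M = 10 experiments of N = 25 draws each.
rng = random.Random(0)
experiments = [[rng.gauss(5.0, 0.2) for _ in range(25)] for _ in range(10)]

mean, sigma, sem = pooled_mean_and_sem(experiments)
print(f"<x> = {mean:.4f} +/- {sem:.4f} (sigma = {sigma:.4f})")
```

With 250 pooled values, the reported `sem` is smaller than `sigma` by a factor of $\sqrt{250} \approx 15.8$.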

You might instead be interested in testing the hypothesis that your measurements from different experiments are in fact all drawn from the same distribution. Perhaps you took most of your data and then fixed a noise source in your apparatus; you don't want to throw away the other data completely, but you'd like to privilege the better data. Or perhaps you are working from old notes, or from other people's published data, and the original raw data aren't accessible. In that case, for each experiment you would compute a mean, variance, and standard error on the mean:

\begin{align} \left<x_i\right> &= \sum_j^{N_i} \frac{x_{ij}}{N_i} \\ \sigma_i^2 &= \sum_j^{N_i} \frac{\left( x_{ij} - \left<x_i\right> \right)^2}{N_i} = \left<x_i^2\right> - \left<x_i\right>^2 \\ \delta_i &= \frac{\sigma_i}{\sqrt{N_i}} \end{align}

In this case the "error-weighted average" is

$$ \left<x\right> = \frac{ \sum_i^M {\delta_i^{-2}}{\left<x_i\right>} }{ \sum_i^M {\delta_i^{-2}} } $$

You can see that, if all of the $\delta_i$ are the same, this is just a straight average, but experiments with small $\delta_i$ contribute more to the average than experiments with large $\delta_i$. The uncertainty on this mean-of-means works out to obey

$$ \frac{1}{\delta^2} = \sum_i^M \frac{1}{\delta_i^2} $$

If you've done parallel resistors, you'll recognize that this $\delta$ is smaller than the smallest $\delta_i$, but including a low-precision experiment won't improve your overall precision by much. You might also notice that, for the special case where all the experiments have the same precision, this works out to $\delta = \delta_i / \sqrt M$, consistent with our definitions above.
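
Here is a minimal sketch of the error-weighted average in Python, assuming you already have each experiment's mean $\left<x_i\right>$ and standard error $\delta_i$. The function name `weighted_mean` and the three example experiments are hypothetical.

```python
import math


def weighted_mean(means, sems):
    """Combine per-experiment means using weights 1 / delta_i**2.

    Returns (combined mean, combined uncertainty delta), where
    1 / delta**2 = sum_i 1 / delta_i**2.
    """
    weights = [1.0 / d ** 2 for d in sems]
    total_weight = sum(weights)
    mean = sum(w * m for w, m in zip(weights, means)) / total_weight
    delta = 1.0 / math.sqrt(total_weight)
    return mean, delta


# Hypothetical numbers: two precise experiments and one noisy one.
means = [10.1, 9.9, 10.4]
sems = [0.1, 0.1, 0.5]

mean, delta = weighted_mean(means, sems)
print(f"<x> = {mean:.3f} +/- {delta:.3f}")
```

In this made-up case the noisy third experiment barely moves the result: the combined mean is about 10.008 and the combined uncertainty is about 0.070, only slightly below the $0.1/\sqrt{2} \approx 0.071$ you would get from the two precise experiments alone.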

To your specific question,

> I calculate the total standard deviation by doing the square root of the quadratic sum of the standard deviation obtained in point 2.

If I'm understanding you correctly, that quadrature sum would make your combined uncertainty bigger, rather than smaller, which would defeat the purpose of doing multiple experiments. You could think of the recipe here as an "inverse quadrature sum," though that name is probably too obscure to be useful without a formula nearby.
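
To see the difference numerically, here is a small Python comparison. It assumes, purely for illustration, ten experiments that each have the same standard error of 0.10.

```python
import math

# Hypothetical: ten experiments, each with standard error delta_i = 0.10.
sems = [0.10] * 10

# Ordinary quadrature sum: grows as more experiments are added.
quadrature = math.sqrt(sum(d ** 2 for d in sems))

# "Inverse quadrature sum": 1/delta**2 = sum_i 1/delta_i**2, which shrinks.
inverse_quadrature = 1.0 / math.sqrt(sum(1.0 / d ** 2 for d in sems))

print(f"quadrature sum:         {quadrature:.4f}")
print(f"inverse quadrature sum: {inverse_quadrature:.4f}")
```

The quadrature sum comes out near 0.32, larger than any single experiment's uncertainty, while the inverse quadrature sum comes out near 0.032, which is $\delta_i/\sqrt{10}$.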

You have a series of random samples for a random variable. In general, each sample can have a different number of measurements and/or a different standard deviation based on the measurements. Here is one accepted approach to produce an estimated mean and standard deviation of the mean based on the series of samples.

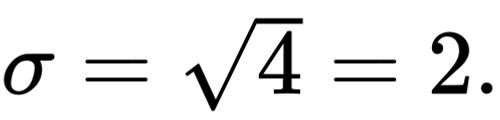

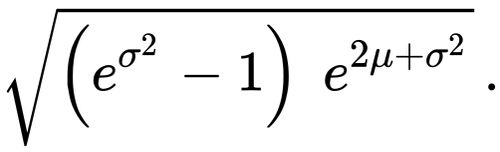

Consider a series of $k$ random samples indexed by $i = 1, 2, \ldots, k$, the $i^{th}$ sample consisting of $n_i$ specific values for the random variable of concern. Each sample can have a different number of specific values. For each sample you evaluate and report the mean and the standard deviation of the mean. The mean of the $i^{th}$ sample is $m_i = {1 \over n_{i}} \sum_{j}^{n_i} y_{ji}$ where $y_{ji}$ is the $j^{th}$ value in the $i^{th}$ sample. The standard deviation of the mean for the $i^{th}$ sample is $S_i = \sqrt{s_i^2 \over n_i}$ where $s_i = \sqrt{{\sum_{j}^{n_i} (y_{ji} - m_i)^2} \over {n_i - 1} }$ is the standard deviation for the $i^{th}$ sample.

The best estimate for the mean is $m_{best} = {\sum_{i = 1}^{k} m_i/S_i^2 \over \sum_{i = 1}^{k} 1/S_i^2}$ and the best estimate for the standard deviation of the mean is $S_{best} = \left(\sum_{i = 1}^{k} {1 \over S_i^2}\right)^{-1/2}$. You report $m_{best} \pm S_{best}$ for your final result.

Note: The sample-based values $m_{best}$ and $S_{best}$ are best estimates of the unknown population mean $\mu$ and of the standard deviation of the mean $\sigma_{\mu}$, respectively.
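
The recipe above can be sketched in Python starting from the raw samples. The function name `combine_samples` and the two tiny example samples are hypothetical. `statistics.stdev` divides by $n_i - 1$, matching the definition of $s_i$ here; each sample needs at least two values and a nonzero spread, otherwise $S_i$ is undefined or zero.

```python
import math
import statistics


def combine_samples(samples):
    """Return (m_best, S_best) for a list of samples of raw values.

    Each sample contributes its mean m_i, weighted by 1 / S_i**2, where
    S_i = s_i / sqrt(n_i) and s_i uses the n_i - 1 denominator.
    """
    means = [statistics.mean(sample) for sample in samples]
    # Standard deviation of the mean for each sample.
    sems = [statistics.stdev(sample) / math.sqrt(len(sample))
            for sample in samples]
    weights = [1.0 / S ** 2 for S in sems]
    total_weight = sum(weights)
    m_best = sum(w * m for w, m in zip(weights, means)) / total_weight
    S_best = total_weight ** -0.5
    return m_best, S_best


# Two made-up samples with different sizes and spreads.
samples = [[1.0, 2.0, 3.0], [2.0, 4.0]]
m_best, S_best = combine_samples(samples)
print(f"{m_best:.3f} +/- {S_best:.3f}")
```

For these made-up samples, $m_1 = 2$ with $S_1^2 = 1/3$ and $m_2 = 3$ with $S_2^2 = 1$, so the weights are 3 and 1, giving $m_{best} = 2.25$ and $S_{best} = 0.5$.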

The text *Data Analysis for Scientists and Engineers* by Meyer provides more details.